Key Takeaways

- DALL-E Mega, an open-source text-to-image model inspired by OpenAI’s DALL-E, can generate unique AI artwork from text captions

- Raspberry Pis provide a low-cost way to run models locally

- Moving to GPU-equipped hardware such as the NVIDIA Jetson Nano significantly speeds up art generation

- Fine-tuning on custom datasets lets you steer the model toward preferred art styles

- Chaining together peripherals lets you build automated art generator showcases

- Debugging tricks like monitoring system resources help optimize performance

Raspberry Pis provide endless opportunities for DIY tech projects. By installing DALL-E Mega, an open-source take on OpenAI’s DALL-E, on your Pi, you can use AI to create unique artwork generated from the text captions you provide.


Why Run DALL-E on a Raspberry Pi?

Here are some of the key benefits of running DALL-E on a Raspberry Pi at home:

- Convenience – Generate art anytime without relying on internet access or a cloud service, because the model runs locally.

- Customization – Craft your own art style by fine-tuning DALL-E models on personal datasets.

- Low Cost – Raspberry Pis offer a lot of value for money compared with other hardware options.

- Portability – Compact and portable setup to showcase your AI art generator anywhere.

- Expandable – Pis accept peripherals such as cameras that can provide input for the model.

What You’ll Need

Generating AI art on a Raspberry Pi with DALL-E is totally achievable as a home project. Here’s what you’ll need to get started:

- Raspberry Pi 4 (4GB RAM model recommended)

- MicroSD Card (16GB Class 10 minimum)

- Power supply (the official 5.1V 3A USB-C supply is recommended for a Pi 4)

- DALL-E model file (see step-by-step guide for download info)

- Optional: a CUDA-capable GPU device, such as an NVIDIA Jetson Nano used in place of the Pi (the Raspberry Pi itself has no CUDA support)
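Once the OS is installed, a quick pre-flight check can confirm that the board matches this list. The sketch below uses only the Python standard library. The RAM and free-space thresholds are assumptions chosen for illustration, not official requirements. The script also reports whether Python is 64-bit, because PyTorch publishes its ARM wheels for 64-bit (aarch64) systems.

```python
from __future__ import annotations

import shutil
import struct
from pathlib import Path

# Assumed thresholds (illustrative, not official): a "4GB" Pi 4 reports a bit
# less than 4 GiB as MemTotal, so the RAM check leaves some headroom.
MIN_MEM_KB = 3_500_000
# Assumed free-space budget for the ~1.2 GB model archive plus its extracted files.
MIN_FREE_BYTES = 4 * 1024**3


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo lines such as 'MemTotal:  3884076 kB' into {name: value}."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values


def check_requirements(mem_total_kb: int, free_bytes: int, pointer_bits: int) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if mem_total_kb < MIN_MEM_KB:
        problems.append(f"Only {mem_total_kb // 1024} MB of RAM; a 4GB or 8GB Pi 4 is recommended")
    if free_bytes < MIN_FREE_BYTES:
        problems.append(f"Only {free_bytes / 1024**3:.1f} GiB free on the SD card")
    if pointer_bits != 64:
        problems.append(f"{pointer_bits}-bit Python; PyTorch's ARM wheels target 64-bit (aarch64)")
    return problems


def main() -> None:
    meminfo_path = Path("/proc/meminfo")
    if not meminfo_path.exists():
        print("/proc/meminfo not found; run this on the Raspberry Pi itself")
        return
    problems = check_requirements(
        mem_total_kb=parse_meminfo(meminfo_path.read_text()).get("MemTotal", 0),
        free_bytes=shutil.disk_usage(Path.home()).free,
        pointer_bits=struct.calcsize("P") * 8,  # pointer size of this Python build
    )
    print("\n".join(problems) if problems else "All pre-flight checks passed")


if __name__ == "__main__":
    main()
```

Keeping the parsing and the checks in separate functions means you can tune the thresholds without touching the code that reads the system files.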

Short Step By Step Process

Following these steps will have DALL-E running on your Pi generating art in no time:

- Install OS – Flash 64-bit Raspberry Pi OS to your microSD card (PyTorch’s ARM packages target 64-bit systems).

- Configure OS – Connect peripherals and enable configurations like SSH, SPI, I2C, etc.

- Install Dependencies – Update packages and install Python dependencies such as torch, pillow and transformers.

- Download DALL-E – Get the DALL-E Mega model file, trained for caption-to-image tasks.

- Run DALL-E – Load the model file and pass text prompts to generate your AI artwork.

- Refine Process – Fine-tune the model on custom datasets or upgrade your compute hardware for better results.

Detailed Step-by-Step Instructions

For those wanting specifics, here are more detailed instructions for setting up DALL-E art generation on a Raspberry Pi:

1. Install 64-bit Raspberry Pi OS to MicroSD Card

Use Raspberry Pi Imager to flash the latest 64-bit Raspberry Pi OS to a fast microSD card (16GB+).

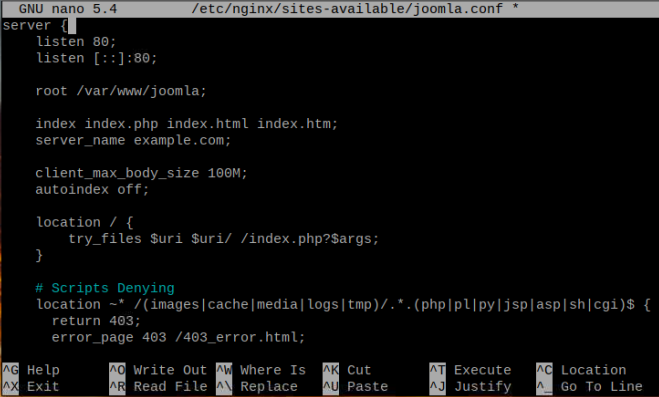

2. Configure Raspberry Pi OS

Connect the Pi to a monitor, keyboard, mouse and network. If you need SSH, SPI or I2C, enable them under Interface Options in sudo raspi-config.

3. Update Package List and Upgrade Packages

```bash
sudo apt update
sudo apt full-upgrade
```

4. Install Python Dependencies

```bash
sudo apt install python3-dev python3-venv python3-pip flex bison -y
```

5. Create Virtual Environment

```bash
python3 -m venv DALLE-env
source DALLE-env/bin/activate
```

6. Install PyTorch and Transformers
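Before installing anything in this step, confirm that the virtual environment from step 5 is actually active. If it is not, pip installs into the system Python instead. Here is a small standard-library sketch (save it under any name, for example check_env.py):

```python
from __future__ import annotations

import sys


def in_virtualenv(prefix: str = sys.prefix, base_prefix: str = sys.base_prefix) -> bool:
    """A venv changes sys.prefix but leaves sys.base_prefix pointing at the system Python."""
    return prefix != base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Virtual environment active: {sys.prefix}")
    else:
        print("No virtual environment active; run: source DALLE-env/bin/activate")
```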

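On a 64-bit Pi, a plain pip install pulls PyTorch’s CPU builds for ARM. The CUDA builds often quoted in guides (such as the +cu111 wheels) are made for x86 PCs and will not install on a Raspberry Pi.

```bash
pip install torch torchvision torchaudio
pip install -U transformers
```

7. Download DALL-E Mega File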
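Before downloading the model, check that the packages from step 6 are visible to the active environment. This sketch uses importlib to look them up without importing them (importing torch on a Pi can take a while) and then prints the installed versions:

```python
from __future__ import annotations

import importlib.util
from importlib import metadata

# Packages installed in step 6.
REQUIRED = ("torch", "torchvision", "transformers")


def missing_modules(names) -> list[str]:
    """Return the names that cannot be found, without importing the heavy packages."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def installed_version(dist_name: str) -> str | None:
    """Return the installed version string, or None if the distribution is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    for name in REQUIRED:
        status = "MISSING" if name in missing else installed_version(name)
        print(f"{name}: {status}")
```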

Go to the site hosting the DALL-E Mega model and download the model.zip file (around 1.2 GB).
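Corrupted model downloads are one of the common problems listed in the FAQ, so verify the archive before unzipping. This sketch assumes the download page publishes a SHA-256 checksum, which not every host does. It compares that checksum with the file you received; the script name verify_download.py is just an example:

```python
from __future__ import annotations

import hashlib
import sys
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash the file in chunks so a 1.2 GB archive never has to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path: Path, expected_hex: str) -> bool:
    """Compare against a published hex digest, ignoring case and stray whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()


def main(argv: list[str]) -> None:
    if len(argv) != 2:
        print("usage: python verify_download.py model.zip <expected-sha256>")
        return
    ok = matches_checksum(Path(argv[0]), argv[1])
    print("Checksum OK" if ok else "Checksum MISMATCH: download the file again")


if __name__ == "__main__":
    main(sys.argv[1:])
```

On the Pi itself, the sha256sum model.zip command prints the same digest if you prefer the shell.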

8. Unzip and Place Mega File Locally

Unzip the archive and place the extracted model file in a local folder, e.g. ~/DALL-E-models/model.safetensors.
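Any unzip tool works for this step. A Python sketch can also CRC-check every member first, which catches a damaged archive before a half-extracted model confuses the generator. The destination matches the example folder above, and the script name extract_model.py is illustrative:

```python
from __future__ import annotations

import sys
import zipfile
from pathlib import Path

MODELS_DIR = Path.home() / "DALL-E-models"  # example folder from this step


def extract_model(archive: Path, dest: Path = MODELS_DIR) -> list[Path]:
    """CRC-check every member, then extract; returns the paths of extracted files."""
    # A badly truncated archive already raises zipfile.BadZipFile when opened.
    with zipfile.ZipFile(archive) as zf:
        bad_member = zf.testzip()  # reads every member; first bad name or None
        if bad_member is not None:
            raise ValueError(f"{archive} is corrupt (first bad member: {bad_member})")
        dest.mkdir(parents=True, exist_ok=True)
        zf.extractall(dest)
        return [dest / name for name in zf.namelist() if not name.endswith("/")]


if __name__ == "__main__":
    if len(sys.argv) == 2:
        for path in extract_model(Path(sys.argv[1])):
            print(f"Extracted {path}")
    else:
        print("usage: python extract_model.py model.zip")
```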

9. Run DALL-E Art Generator Script

Pass a text prompt to the script, which loads the model and outputs the generated artwork.

```bash
python dalle_art_generator.py "An armchair in the shape of an avocado"
```

And that’s it! Your Raspberry Pi setup should now generate AI art locally with DALL-E. Time to get creative with the text prompts.
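The guide does not show what dalle_art_generator.py contains, so below is a hypothetical skeleton of its command-line side only. It normalises the prompt, builds a filesystem-safe output name, and hands off to a generate callable. Model loading is deliberately left as a placeholder (load_generator is an invented name, not a real library API), because the loading code depends on which DALL-E Mega port you downloaded.

```python
from __future__ import annotations

import argparse
import re
from pathlib import Path
from typing import Callable

# A generator takes a prompt and returns encoded image bytes (for example PNG data).
ImageGenerator = Callable[[str], bytes]


def slugify(prompt: str, max_len: int = 60) -> str:
    """Make a filesystem-safe file stem from the prompt text."""
    slug = re.sub(r"[^a-z0-9]+", "-", prompt.lower()).strip("-")
    return slug[:max_len].rstrip("-") or "image"


def load_generator() -> ImageGenerator:
    """Hypothetical placeholder: replace with the loading code for your model port."""
    raise NotImplementedError("Load the DALL-E Mega model here and return a generator")


def run(prompt: str, out_dir: Path, generate: ImageGenerator) -> Path:
    """Normalise the prompt, generate the image and save it as <slug>.png."""
    prompt = " ".join(prompt.split())  # collapse stray spaces and newlines
    if not prompt:
        raise ValueError("Prompt must not be empty")
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / f"{slugify(prompt)}.png"
    out_path.write_bytes(generate(prompt))
    return out_path


def main(argv: list[str] | None = None) -> None:
    parser = argparse.ArgumentParser(description="Generate an image from a text prompt")
    parser.add_argument("prompt", nargs="?", help="text caption, quoted on the command line")
    parser.add_argument("--out-dir", type=Path, default=Path("outputs"))
    args = parser.parse_args(argv)
    if args.prompt is None:
        parser.print_help()
        return
    saved = run(args.prompt, args.out_dir, load_generator())
    print(f"Saved {saved}")


if __name__ == "__main__":
    main()
```

Once load_generator is filled in, the command above would save outputs/an-armchair-in-the-shape-of-an-avocado.png. Passing the generator into run as an argument also lets you test the script without loading the model.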

Optimizing Performance

To optimize DALL-E on Raspberry Pi for better performance you can:

- Use the 8GB RAM model of the Raspberry Pi 4

- Attach heatsinks or fans for cooling

- Use a fast MicroSD storage card for better IO

- Minimize background OS processes

- Lower image resolution returned by model

- Simplify text prompts to reduce model load
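To see whether heatsinks or a fan are actually needed, watch the SoC temperature while an image is being generated. On Raspberry Pi OS the kernel exposes it in /sys/class/thermal/thermal_zone0/temp as millidegrees Celsius. The 80 °C warning threshold in this sketch is an assumption, chosen to sit near the point where a Pi 4 starts to throttle.

```python
from __future__ import annotations

import time
from pathlib import Path

THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")
WARN_AT_C = 80.0  # assumed threshold, around where a Pi 4 starts to throttle


def parse_millidegrees(raw: str) -> float:
    """The kernel reports millidegrees Celsius, e.g. '52582' means 52.582 °C."""
    return int(raw.strip()) / 1000


def read_temp_c() -> float | None:
    """Return the SoC temperature, or None when the sensor file is unavailable."""
    try:
        return parse_millidegrees(THERMAL_FILE.read_text())
    except (OSError, ValueError):
        return None


def watch(samples: int = 5, interval_s: float = 1.0) -> None:
    """Print a few readings; run it while an image is being generated."""
    for _ in range(samples):
        temp_c = read_temp_c()
        if temp_c is None:
            print(f"{THERMAL_FILE} not readable; run this on the Pi")
            return
        warning = "  <- consider a heatsink or fan" if temp_c >= WARN_AT_C else ""
        print(f"SoC temperature: {temp_c:.1f} °C{warning}")
        time.sleep(interval_s)


if __name__ == "__main__":
    watch()
```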

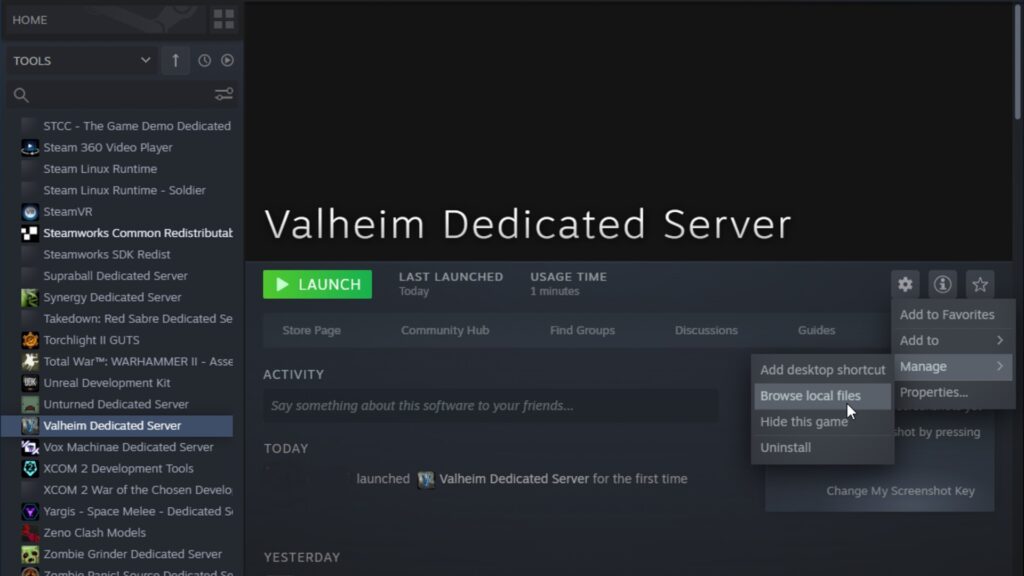

For more advanced use, switching to GPU-equipped hardware such as the NVIDIA Jetson Nano (a separate single-board computer, not a Pi add-on) can drastically cut inference time, because its CUDA cores process the model’s computations in parallel.
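If you keep one script for both boards, a common PyTorch pattern is to choose the device at runtime. The same code then uses CUDA on a Jetson Nano and falls back to the CPU on a Raspberry Pi. A minimal sketch, which also copes with PyTorch not being installed:

```python
from __future__ import annotations

import importlib.util


def pick_device() -> str:
    """Return 'cuda' when PyTorch sees a CUDA GPU (e.g. on a Jetson Nano), else 'cpu'."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch not installed; nothing to accelerate with
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Running inference on: {pick_device()}")
```

The model and its input tensors would then be moved to that device with .to(device), which both PyTorch modules and tensors support.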

Advanced Configurations

More advanced setups are possible too, chaining inputs and outputs around your DALL-E art generator Raspberry Pi:

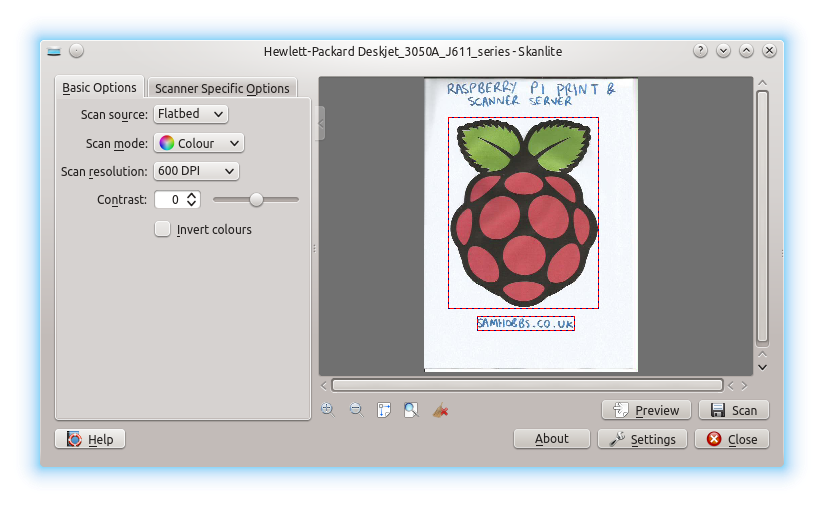

- Attach camera modules to have custom inputs

- Connect displays to showcase generated art automatically

- Build a tablet-based interface for portable interaction

- Link up Arduino sensors to alter generative art based on environment

- Containerize model server for easy redeployment anywhere

The Raspberry Pi’s flexibility makes all this possible for DIY enthusiasts who want to build custom AI-powered art machines.
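For the idea of connecting a display that showcases generated art automatically, a kiosk script mostly needs to know which image is newest. This sketch assumes the generator saves its images into a folder called outputs (an assumed name) and only reports new files. Showing them is left to whatever image viewer your display uses.

```python
from __future__ import annotations

import time
from pathlib import Path

OUTPUT_DIR = Path("outputs")  # assumed folder the generator saves images into
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def newest_image(folder: Path) -> Path | None:
    """Return the most recently modified image file in folder, or None."""
    images = [p for p in folder.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES]
    return max(images, key=lambda p: p.stat().st_mtime, default=None)


def poll(folder: Path = OUTPUT_DIR, rounds: int = 12, interval_s: float = 5.0) -> None:
    """Check the folder periodically and report each newly finished artwork."""
    shown = None
    for _ in range(rounds):
        latest = newest_image(folder) if folder.is_dir() else None
        if latest is not None and latest != shown:
            print(f"New artwork ready: {latest}")  # a kiosk would display it here
            shown = latest
        time.sleep(interval_s)
```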

Troubleshooting

Here are solutions to some common problems when setting up DALL-E on Raspberry Pi:

1. No Image Generated

- Double-check that prompts are formatted correctly for the model (for example, quoted on the command line so the whole caption arrives as one argument)

- Validate model assets downloaded fully without corruption

- Ensure GPU libraries like CUDA are installed correctly (only relevant on CUDA hardware such as a Jetson Nano; a Raspberry Pi runs the model on its CPU)
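When no image appears, first establish whether the script wrote a file at all. Every PNG starts with the same 8-byte signature, so a quick check can tell apart a missing file, an empty file and a file that is not a PNG. This sketch assumes the script saves PNG output. A file can still be truncated after a valid header, so "ok" is only a first check.

```python
from __future__ import annotations

import sys
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # the fixed 8-byte header of every PNG file


def diagnose_output(path: Path) -> str:
    """Classify a generated file as 'missing', 'empty', 'not-png' or 'ok'."""
    if not path.is_file():
        return "missing"
    with open(path, "rb") as f:
        header = f.read(len(PNG_SIGNATURE))
    if not header:
        return "empty"
    return "ok" if header == PNG_SIGNATURE else "not-png"


if __name__ == "__main__":
    for arg in sys.argv[1:]:
        print(f"{arg}: {diagnose_output(Path(arg))}")
```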

2. Images Generated are Low Resolution

- Output resolution is fixed by the model itself; DALL-E Mega, for instance, generates 256×256 images

- Try a different model version with a higher native output resolution

3. Model Inference is Very Slow

- Use a Raspberry Pi 4 with more RAM (the 8GB model)

- Close unnecessary background processes

- Check CPU/GPU load for spikes during inference

- Incorporate active cooling solution to prevent thermal throttling
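To confirm thermal throttling, or an undersized power supply, instead of guessing, use the vcgencmd tool that ships with Raspberry Pi OS. Running vcgencmd get_throttled prints a bit field such as throttled=0x50000. The bit meanings below follow the Raspberry Pi documentation, and the sketch simply decodes them:

```python
from __future__ import annotations

import shutil
import subprocess

# Bit meanings as documented for `vcgencmd get_throttled`.
THROTTLE_BITS = {
    0: "under-voltage detected now",
    1: "ARM frequency capped now",
    2: "currently throttled",
    3: "soft temperature limit active now",
    16: "under-voltage has occurred since boot",
    17: "ARM frequency capping has occurred since boot",
    18: "throttling has occurred since boot",
    19: "soft temperature limit has occurred since boot",
}


def decode_throttled(output: str) -> list[str]:
    """Decode output like 'throttled=0x50000' into human-readable flags."""
    value = int(output.strip().split("=", 1)[1], 16)  # int() accepts the 0x prefix
    return [text for bit, text in THROTTLE_BITS.items() if value & (1 << bit)]


if __name__ == "__main__":
    if shutil.which("vcgencmd") is None:
        print("vcgencmd not found; run this on Raspberry Pi OS")
    else:
        result = subprocess.run(["vcgencmd", "get_throttled"], capture_output=True, text=True, check=True)
        flags = decode_throttled(result.stdout)
        print("\n".join(flags) if flags else "No throttling or under-voltage reported")
```

Under-voltage flags point at the power supply rather than cooling, which is worth ruling out before adding a fan.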

4. Cannot Access Raspberry Pi Remotely

- Verify SSH server is enabled on the device

- Check IP addresses, firewall rules and internet connectivity

- Re-image SD card and reconfigure if issues persist
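Before re-imaging the card, check from your other computer whether anything is listening on the Pi’s SSH port (22 by default). This standard-library sketch takes the Pi’s IP address or hostname on the command line; the script name check_ssh.py is illustrative:

```python
from __future__ import annotations

import socket
import sys


def port_open(host: str, port: int = 22, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, unreachable, timed out or unknown host
        return False


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python check_ssh.py <pi-ip-or-hostname>")
    else:
        host = sys.argv[1]
        state = "reachable" if port_open(host) else "NOT reachable"
        print(f"SSH port on {host} is {state}")
```

A refused connection usually means SSH is disabled, while a timeout points more toward a wrong IP address or a firewall.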

Conclusion

Setting up DALL-E for AI art generation on a Raspberry Pi unlocks exciting creative potential, putting artificial intelligence into a maker-friendly package.

Following the step-by-step guide detailed here allows DIY enthusiasts of all skill levels to leverage advanced deep learning, accomplishing results previously out of reach. While basic setups work well, the possibilities expand even further when combining Raspberry Pi flexibility with model customization, hardware peripherals and config optimizations.

So power up your Pi and transform imagined art concepts into real visual results with DALL-E today!

FAQs

- What hardware is required beyond the Raspberry Pi?

Beyond the Raspberry Pi itself, you need a microSD card for the operating system and a power supply. For display output you also need a monitor with HDMI input and a suitable cable (the Pi 4 has micro-HDMI ports).

- What kind of images can DALL-E generate on the Pi?

DALL-E-style models can generate remarkably diverse art, photos and illustrations based solely on the text description you provide.

- How fast are images created?

Generation time varies dramatically with the model, the hardware and prompt complexity. On a Raspberry Pi 4 with 4GB RAM, a simple image may take around 35 seconds, but time your own runs rather than relying on a single figure.

- How many images can be created successively?

The number of successive images will be limited by available RAM, but generating 5-10 images consecutively is very feasible before hitting hardware constraints.

- Do model versions differ significantly in quality or speed?

Yes, DALL-E models are always being iterated on. Newer versions trained on more data provide noticeable boosts but have higher system requirements.

- Which model file is recommended?

As of early 2023, the DALL-E Mega file provides a great blend of quality, reasonable resource requirements and accessibility for enthusiasts.

- What kind of customization is supported?

Models can be fine-tuned on new datasets tailored to specific art styles. This allows generating images better matched to personal preferences.

- What are the most common issues encountered?

Insufficient RAM, thermal throttling and corrupted model downloads are the most common reasons a model fails to load or run inference properly. Using a heatsink helps regulate temperatures.

- What peripherals can be added to enhance capabilities?

Cameras, displays, sensors, input buttons and even microcontrollers like Arduinos can be combined into an automated art generation showcase.

- Can DALL-E on a Pi be accessed from the internet?

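Yes, but only if you run your own web server or API in front of the model, since the setup above provides just a command-line script. Port forwarding rules on your router can then expose it to the internet, similar to other cloud-hosted solutions.

- Is setting up containerization worthwhile?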

Container platforms like Docker let you save configured instances that encapsulate their dependencies, which helps when you frequently migrate across different hardware.

- What are best practices when providing text prompts?

Using concise, unambiguous descriptive statements generally works best. You can also chain prompts together for variations or added specificity.

- What types of input images work best for fine-tuning?

For fine-tuning you’ll want a structured dataset of at least a few hundred high-quality images that exemplify the desired art genre or style.

- How long does fine-tuning a model take?

Fine-tuning runs hundreds of training iterations, so expect runtimes measured in days rather than minutes or hours, even on accelerated hardware, and far longer on a Pi’s CPU.

- Is generating adult or dangerous content blocked?

Not automatically. OpenAI applies content moderation on its hosted DALL-E service, but open models you download and run locally, such as DALL-E Mega, do not inherit those filters, so any filtering has to be added yourself.

- What other AI models are compatible?

Stable Diffusion has openly released weights and can run locally on capable hardware, making it an alternative to DALL-E for generating images. Google’s Parti and Imagen, by contrast, have not been released for local use.

- Can animation or video be produced?

The standalone DALL-E model focuses specifically on static images. To produce animation or video, additional machine learning workflows would need to be constructed using the images as input frames.

- What risks around AI art should be considered?

Ethical concerns like copyright of assets used for training datasets are still being unpacked. Additionally, biases perpetuated by the data may be propagated. Checking licenses and model documentation helps navigate these issues.